Ship Agents to Production.

Build, test, and monitor AI agents. Git-powered. Try it for free.

Seamlessly integrate with your existing stack

Works with the tools you already use. No vendor lock-in.

What makes AgentMark different?

| Feature | Traditional LLM Platforms | AgentMark |
|---|---|---|
| Git Version Control: Own your prompts, evals, and datasets in your own GitHub repository. | | |
| Local and Hosted Collaboration: Developers can work locally while domain experts collaborate on our hosted platform. | | |
| Type Safety: Treat prompts as functions with end-to-end type safety. | | |
| Developer-Friendly Formats: Save prompts as readable Markdown + JSX and datasets as JSONL. | | |
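To give a feel for why JSONL is a convenient dataset format, here is a minimal TypeScript sketch that parses one JSON object per line into typed rows. The `DatasetRow` shape, the `parseJsonl` helper, and the sample rows are assumptions made up for this example, not a documented AgentMark schema or API.

```typescript
// Hypothetical row shape for illustration; not a documented AgentMark schema.
interface DatasetRow {
  input: Record<string, unknown>;
  expected_output?: string;
}

// Parse JSONL text: one JSON object per non-empty line.
function parseJsonl(text: string): DatasetRow[] {
  return text
    .split("\n")
    .map((line) => line.trim())
    .filter((line) => line.length > 0)
    .map((line, i) => {
      const row = JSON.parse(line) as DatasetRow;
      if (typeof row.input !== "object" || row.input === null) {
        throw new Error(`Row ${i + 1} is missing an "input" object`);
      }
      return row;
    });
}

// Made-up sample data: two rows, the second without an expected output.
const sample = [
  '{"input": {"userMessage": "What is AgentMark?"}, "expected_output": "A short product summary"}',
  '{"input": {"userMessage": "Is there a free tier?"}}',
].join("\n");

const rows = parseJsonl(sample);
console.log(rows.length);
```

Because every line is an independent JSON object, rows diff cleanly in Git and can be appended without rewriting the file.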

Dev-friendly you say? Show me.

---
name: agentmark-demo
text_config:
  model_name: gpt-4o-mini
test_settings:
  props:
    userMessage: "What are the reasons developers love AgentMark prompts?"
    reasons:
      - "Readable markdown and JSX"
      - "Syntax highlighting"
      - "Local development"
      - "Full Type-Safety both within templates and your codebase"
      - "We integrate with your favorite AI code editor(s)"
      - "We adapt to any SDK you want (e.g. Vercel, Langchain, etc.)"
---

<System>
You are a helpful customer support agent for AgentMark. Here are the reasons AgentMark is great.
<ForEach arr={props.reasons}>
  {(reason) => (
    <>
      - {reason}
    </>
  )}
</ForEach>
</System>

<User>
{props.userMessage}
</User>
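To make "prompts as functions" concrete, here is a minimal TypeScript sketch of what the template above roughly amounts to: a function from typed props to chat messages. The `DemoProps` and `ChatMessage` types and the `renderDemoPrompt` function are hypothetical names written by hand for this example. They are not the AgentMark SDK, and the exact whitespace a real renderer produces may differ.

```typescript
// Hypothetical types mirroring the props used by the template above.
interface DemoProps {
  userMessage: string;
  reasons: string[];
}

interface ChatMessage {
  role: "system" | "user";
  content: string;
}

// Hand-written stand-in for the <System>/<User> template, for illustration only.
function renderDemoPrompt(props: DemoProps): ChatMessage[] {
  // Equivalent of the <ForEach> block: one bullet per reason.
  const bullets = props.reasons.map((reason) => `- ${reason}`).join("\n");
  return [
    {
      role: "system",
      content:
        "You are a helpful customer support agent for AgentMark. " +
        "Here are the reasons AgentMark is great.\n" +
        bullets,
    },
    { role: "user", content: props.userMessage },
  ];
}

const messages = renderDemoPrompt({
  userMessage: "What are the reasons developers love AgentMark prompts?",
  reasons: ["Readable markdown and JSX", "Syntax highlighting"],
});
console.log(messages);
```

With this shape, a call such as `renderDemoPrompt({ userMessage: 42 })` fails at compile time, since the value has the wrong type and `reasons` is missing. That is the kind of mistake type-safe prompts are meant to catch before a request is ever sent.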

Create me a basic customer support agent for AgentMark.

Boost developer productivity

Use the latest AI dev tooling without leaving your code editor: generate synthetic data, create and update prompts, and customize evals.

Seamlessly integrate new models

Instantly pull new models into your studio using our CLI. Add only the providers and models you care about, so non-technical users aren't overwhelmed.

Explore documentation

Graduate from prototype to production

AgentMark provides everything you need to ship production-ready LLM applications.

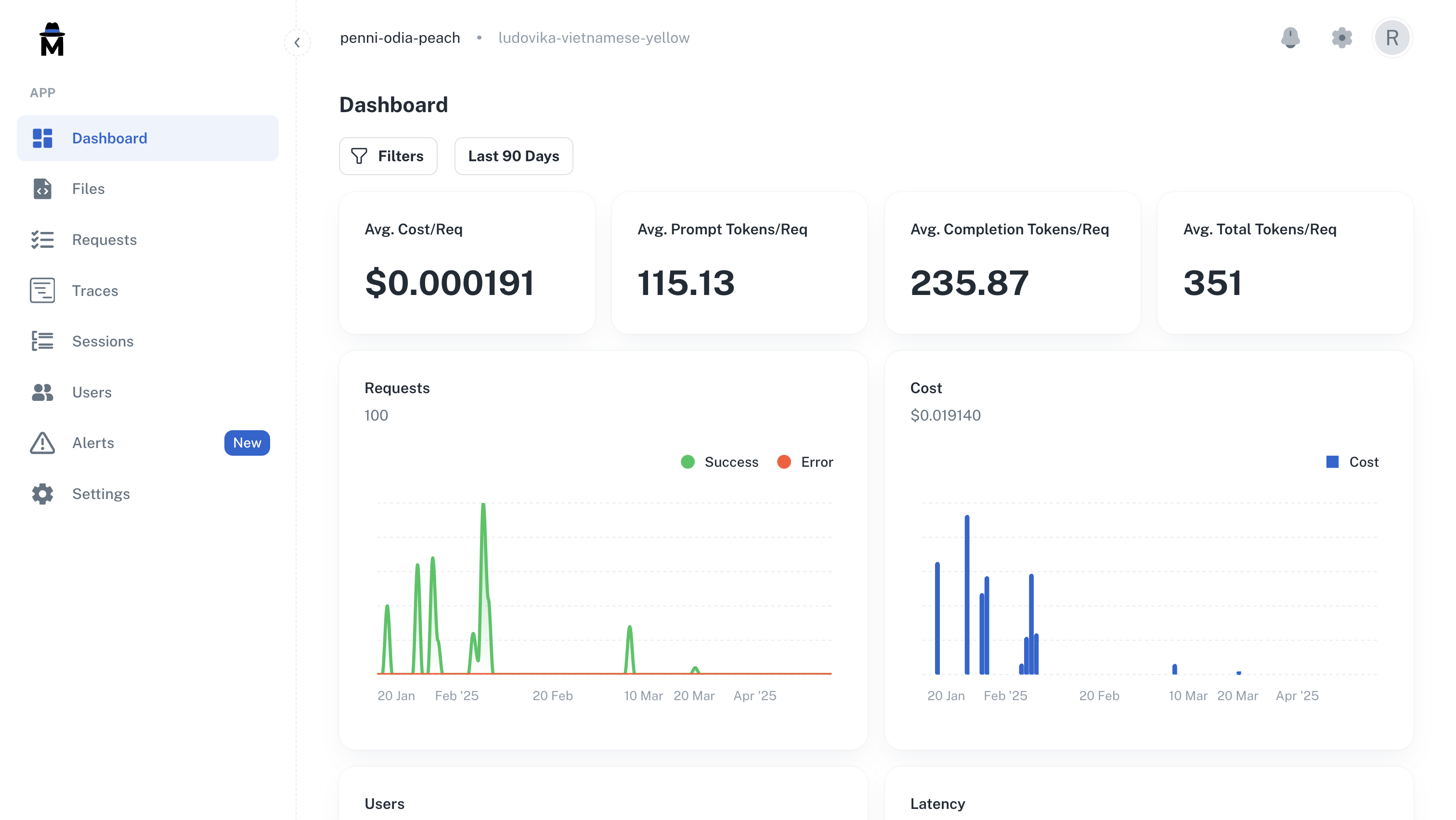

Metrics

Gain comprehensive visibility into your LLM applications with powerful analytics.

- Track cost, latency, and quality metrics

- Monitor error rates and identify issues quickly

- Analyze request volume and token usage trends
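As a rough sketch of what these metrics boil down to, the snippet below aggregates a few request records into total cost, average latency, error rate, and token usage. The `RequestRecord` shape, the `summarize` helper, and the sample values are invented for illustration and do not reflect AgentMark's actual data model.

```typescript
// Hypothetical per-request record; field names are assumptions for this example.
interface RequestRecord {
  costUsd: number;
  latencyMs: number;
  totalTokens: number;
  ok: boolean;
}

interface Summary {
  requests: number;
  totalCostUsd: number;
  avgLatencyMs: number;
  errorRate: number;
  totalTokens: number;
}

function summarize(records: RequestRecord[]): Summary {
  const requests = records.length;
  // Sum one numeric field across all records.
  const sum = (pick: (r: RequestRecord) => number): number =>
    records.reduce((acc, r) => acc + pick(r), 0);
  const errors = records.filter((r) => !r.ok).length;
  return {
    requests,
    totalCostUsd: sum((r) => r.costUsd),
    // Guard against division by zero when there are no records.
    avgLatencyMs: requests === 0 ? 0 : sum((r) => r.latencyMs) / requests,
    errorRate: requests === 0 ? 0 : errors / requests,
    totalTokens: sum((r) => r.totalTokens),
  };
}

// Made-up sample values.
const summary = summarize([
  { costUsd: 0.25, latencyMs: 800, totalTokens: 1200, ok: true },
  { costUsd: 0.5, latencyMs: 1200, totalTokens: 2400, ok: true },
  { costUsd: 0.25, latencyMs: 400, totalTokens: 400, ok: false },
  { costUsd: 0, latencyMs: 1600, totalTokens: 0, ok: false },
]);
console.log(summary);
```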

AgentMark is, by far, the best prompt representation layer of this new stack. You're the only people I've seen that take actual developer needs seriously in this regard.

Dominic Vinyard

Founding Designer


Start shipping reliable agents today.

Get production-ready in minutes. Free to start, no credit card required.