Close the Agent Reliability Loop.

AI agents work in demos. Reliable behavior in production is the hard part. AgentMark gives your agents the same reliability loop your code already has: prompts, datasets, and evals in git, testing in CI, traces in your OTel stack, and alerts when things go wrong.

Your agents are degrading right now.

Most teams find out from users, not dashboards. Here's what that looks like.

Silent regression

−23%

response quality

Prompt shipped Monday. Response quality dropped 23%. No alert fired. A user complained on Friday.

Caught 4 days later
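
An eval gate in CI is the kind of check meant to stop a regression like this before it ships. Here is a minimal sketch in Python. The `relative_drop` and `gate` helpers, the 5% tolerance, and the scores are all hypothetical and chosen for illustration. This is not AgentMark's API.

```python
from statistics import mean

def relative_drop(baseline_scores, candidate_scores):
    """Fractional drop in mean eval score from baseline to candidate."""
    base = mean(baseline_scores)
    cand = mean(candidate_scores)
    return (base - cand) / base

def gate(baseline_scores, candidate_scores, max_drop=0.05):
    """Return True when the candidate prompt stays within tolerance."""
    return relative_drop(baseline_scores, candidate_scores) <= max_drop

# Illustrative per-row scores in the range 0..1, not real measurements.
baseline = [0.90, 0.80, 1.00, 0.90]
candidate = [0.70, 0.60, 0.80, 0.67]

if __name__ == "__main__":
    ok = gate(baseline, candidate)
    print("merge allowed" if ok else "regression: block the PR")
```

In a CI job, exiting with a non-zero status when `gate` returns `False` would typically fail the check and hold the merge.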

Runaway loop

$47,000

total damage

Two agents got stuck coordinating. Week 1 cost $127. Week 4 cost $18,400. The team mistook the spike for user growth.

Caught on the invoice
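
One way to tell a runaway loop from real growth is to compare how fast spend rises against how fast traffic rises. Below is a rough sketch, assuming weekly cost and request totals are available. The function names, the 2x ratio, and the request counts are assumptions. Only the two cost figures come from the scenario above.

```python
def growth(first, last):
    """Multiplicative growth between two positive values."""
    return last / first

def cost_outpaces_traffic(weekly_cost, weekly_requests, max_ratio=2.0):
    """Flag when spend grows much faster than request volume."""
    cost_growth = growth(weekly_cost[0], weekly_cost[-1])
    traffic_growth = growth(weekly_requests[0], weekly_requests[-1])
    return cost_growth / traffic_growth > max_ratio

# Week 1 and week 4 costs from the scenario; request counts are made up.
weekly_cost = [127, 18_400]
weekly_requests = [1_000, 1_200]

if __name__ == "__main__":
    if cost_outpaces_traffic(weekly_cost, weekly_requests):
        print("cost is growing far faster than traffic")
```

If requests had grown at the same rate as cost, the check would stay quiet, which is the case the team assumed they were in.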

Fluent failure

200 OK

HTTP status

Agent called a tool with a wrong parameter. Database returned zero rows. The agent told the user: "I couldn't find any data." Every dashboard stayed green.

Never caught
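
Status codes cannot see this failure, so the check has to look at what the tool call actually did. The sketch below is illustrative and assumes tool calls are recorded with their parameters and row counts. The `ToolCall` record, the `lookup_orders` tool, and its allowed parameters are all hypothetical.

```python
from dataclasses import dataclass

@dataclass
class ToolCall:
    name: str
    params: dict
    rows_returned: int
    http_status: int = 200

# Hypothetical schema: the parameter names each tool accepts.
ALLOWED_PARAMS = {"lookup_orders": {"customer_id", "since"}}

def suspicious(call: ToolCall) -> list[str]:
    """Return reasons a 'successful' tool call may still be a failure."""
    reasons = []
    unknown = set(call.params) - ALLOWED_PARAMS.get(call.name, set())
    if unknown:
        reasons.append(f"unknown parameters: {sorted(unknown)}")
    if call.http_status == 200 and call.rows_returned == 0:
        reasons.append("200 OK with zero rows")
    return reasons

if __name__ == "__main__":
    bad = ToolCall("lookup_orders", {"customerId": "c_42"}, rows_returned=0)
    for reason in suspicious(bad):
        print(reason)
```

A zero-row result is not always wrong, so a check like this is better treated as a signal to review than as a hard failure.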

The fix is the same one that works for code: evals, observability, alerts, and version control.

That's AgentMark.

The reliability loop your agents are missing.

Your code has had this loop for years. Your agents haven't — until now.

git commit

Edit your Agent, dataset, or eval in your repo

Prompts + DatasetsCI runs evals

Regressions block the PR — not your users

Evaluations in CIgit merge

Green evals gate every deploy to main

Git-native deploydiagnose + fix

Diagnose the trace, add the test case & fix

Back to step 01alert fires

Quality, cost, or latency crosses your threshold

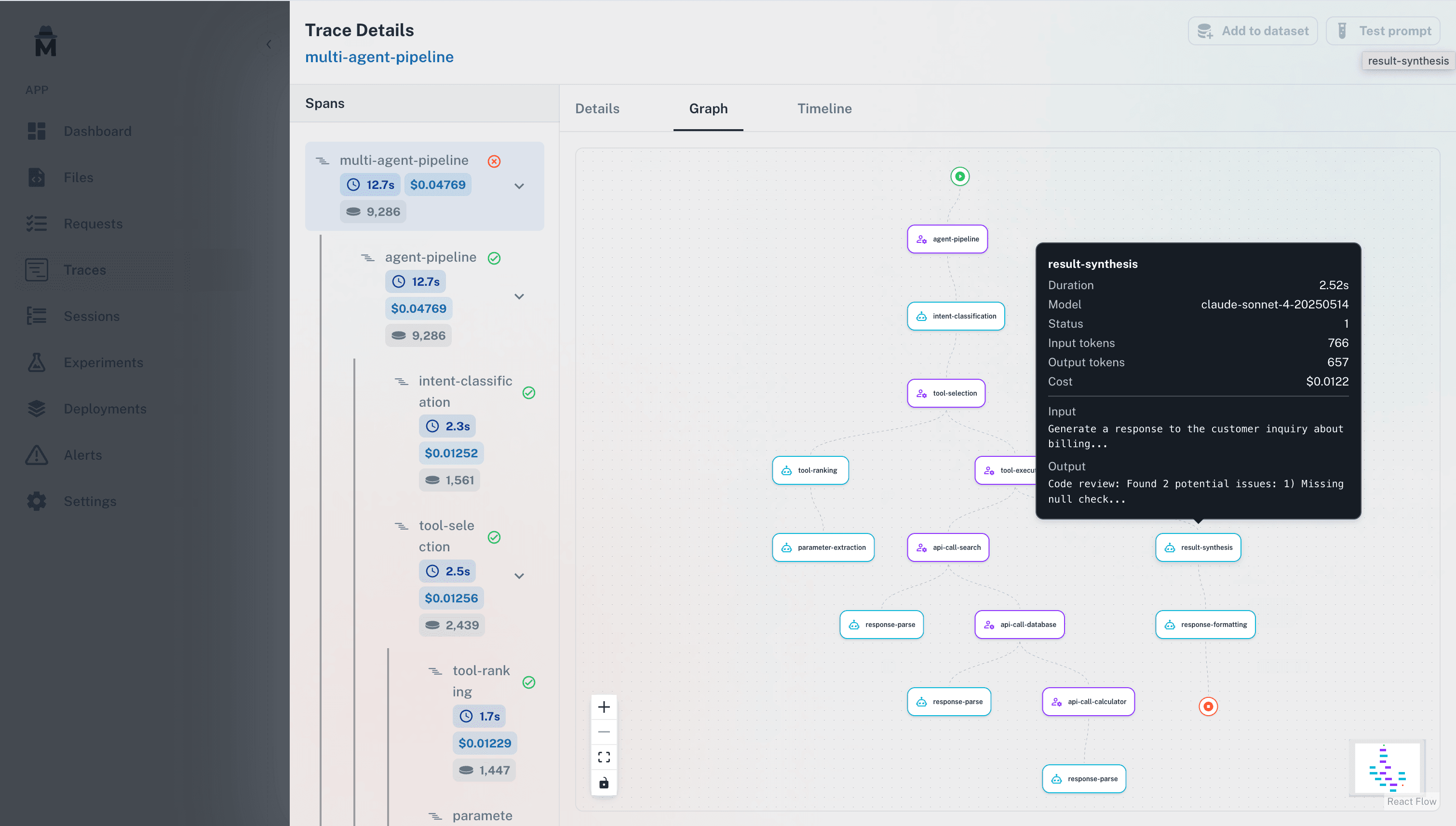

AlertsOTel traces

Every span, token, and tool call captured

Tracing + MetricsAgentMark catches regressions before deploy with evals in CI, then catches what slips through with OTel and alerts.

Catch problems before your users do.

Metrics, traces, prompts, datasets, evals, experiments, and alerts — all connected, all in your repo.

Metrics

Know your cost, latency, and error rate before a user complains — not after.

- Monitor cost per request and error rate

- Track latency and quality trends across model versions and prompt changes

- Correlate token usage spikes with specific prompt or code changes
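
The metrics above can be derived from trace records grouped by prompt version. Here is a small sketch. The record shape and the field names (`prompt_version`, `cost_usd`, `error`) are assumptions for illustration and not a real export format.

```python
from collections import defaultdict

# Hypothetical flattened trace records, one per request.
spans = [
    {"prompt_version": "v1", "cost_usd": 0.01, "error": False},
    {"prompt_version": "v1", "cost_usd": 0.03, "error": False},
    {"prompt_version": "v2", "cost_usd": 0.05, "error": True},
    {"prompt_version": "v2", "cost_usd": 0.07, "error": False},
]

def summarize(spans):
    """Cost per request and error rate, grouped by prompt version."""
    groups = defaultdict(list)
    for span in spans:
        groups[span["prompt_version"]].append(span)
    return {
        version: {
            "requests": len(rows),
            "cost_per_request": sum(r["cost_usd"] for r in rows) / len(rows),
            "error_rate": sum(r["error"] for r in rows) / len(rows),
        }
        for version, rows in groups.items()
    }

if __name__ == "__main__":
    for version, stats in summarize(spans).items():
        print(version, stats)
```

Grouping by prompt version is what makes a jump in cost or errors traceable to a specific change.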

“AgentMark is, by far, the best agent representation layer of this new stack. You're the only people I've seen that take actual developer needs seriously in this regard.”

Dominic Vinyard

Founding AI Designer

San Francisco, CA

Works with your entire stack.

No proprietary SDKs. Standard OpenTelemetry for traces, git for version control, and direct support for every major model and framework.

Works in your existing editor.

Prompts, datasets, and evals are just files. Your AI assistant can read, write, and refactor them like any other code.

Prompts live in your codebase.

Your AI editor connects to the AgentMark docs via MCP. Ask Claude Code or Cursor to generate a prompt, update parameters, or refactor your system message, with no context switch.

Your data, your repo, your standards.

Closed platforms own your data and lock you into their SDKs. AgentMark doesn't.

Ready to ship agents you can trust?

One command. Connected in minutes. No credit card required.